Well, after a few years of absence, I’ll try to write here once in a while.

Something about cloud security… mainly the Microsoft technology stack… as that is what I am most familiar with 🙂

See you soon!

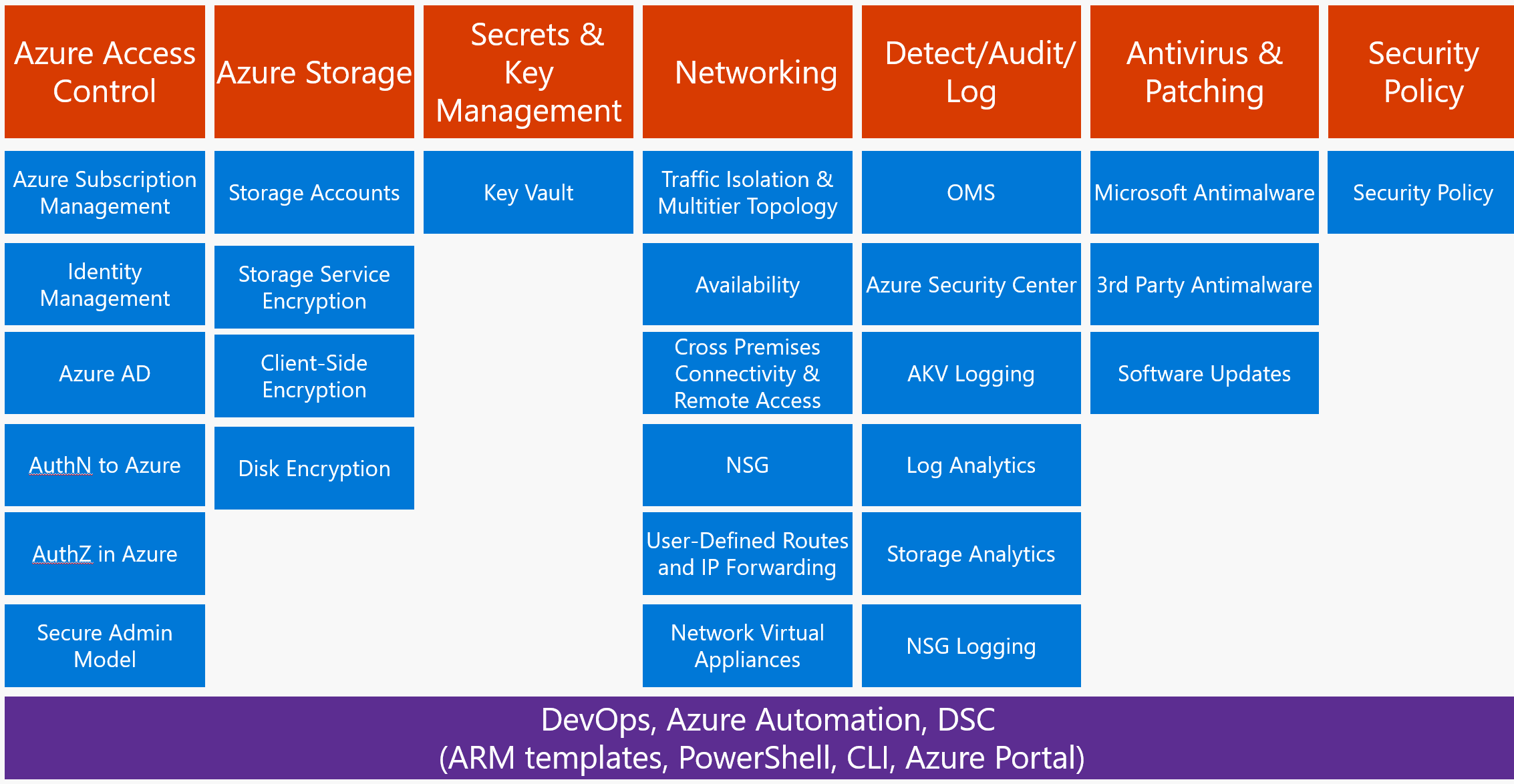

Hi there, in my last post (yes, more than 15 months ago), I started describing an overall framework that can be used to securely design and implement applications in Azure.

Secure administration is one of the foundational building blocks of overall security for applications deployed in Azure. Over the next few blog posts, we'll discuss different aspects of secure administration of Azure resources and some ideas on how to make it all work.

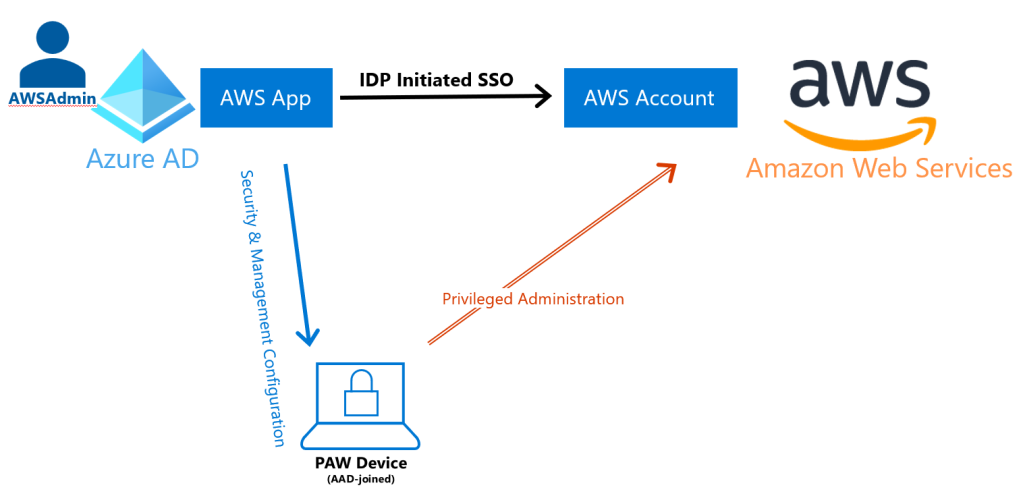

One of the main principles of secure administration is trust in the user identity that is used to administer resources. This trust assures us that the identity is not compromised and is not exposed to compromise by the bad guys. To have this trust, we need a solid administrative architecture that ensures privileged accounts can be used only from approved, properly managed devices with no or very limited attack surface. The goal of such an architecture is to ensure that a trusted identity can be used only from managed, trusted devices, and that any attempt to use it from another device is denied.
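The principle above boils down to an allow/deny decision made at sign-in. Below is a minimal Python model of that decision under stated assumptions: the `SignIn` type, the `evaluate` function, the account name, and the device IDs are all hypothetical. In Azure AD, a comparable control is a Conditional Access policy that requires a compliant device; this code only illustrates the logic and is not that feature's API.

```python
from dataclasses import dataclass

# Hypothetical model of "privileged identities only from trusted devices".
# None of these names come from Azure AD; they exist only for illustration.

@dataclass(frozen=True)
class SignIn:
    account: str
    device_id: str
    device_compliant: bool  # assumed to be reported by device management

# Hypothetical inventories of privileged accounts and approved admin workstations.
PRIVILEGED_ACCOUNTS = {"admin@contoso.com"}
APPROVED_ADMIN_DEVICES = {"paw-001", "paw-002"}

def evaluate(sign_in: SignIn) -> bool:
    """Return True to allow the sign-in, False to deny it."""
    if sign_in.account not in PRIVILEGED_ACCOUNTS:
        # Non-privileged accounts are outside the scope of this rule.
        return True
    # A privileged account is allowed only from an approved AND compliant device.
    return sign_in.device_id in APPROVED_ADMIN_DEVICES and sign_in.device_compliant

print(evaluate(SignIn("admin@contoso.com", "paw-001", True)))    # True
print(evaluate(SignIn("admin@contoso.com", "laptop-17", True)))  # False
print(evaluate(SignIn("admin@contoso.com", "paw-002", False)))   # False
```

In this model, a stolen password alone does not get an attacker in, because the attacker's machine is not on the approved list.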

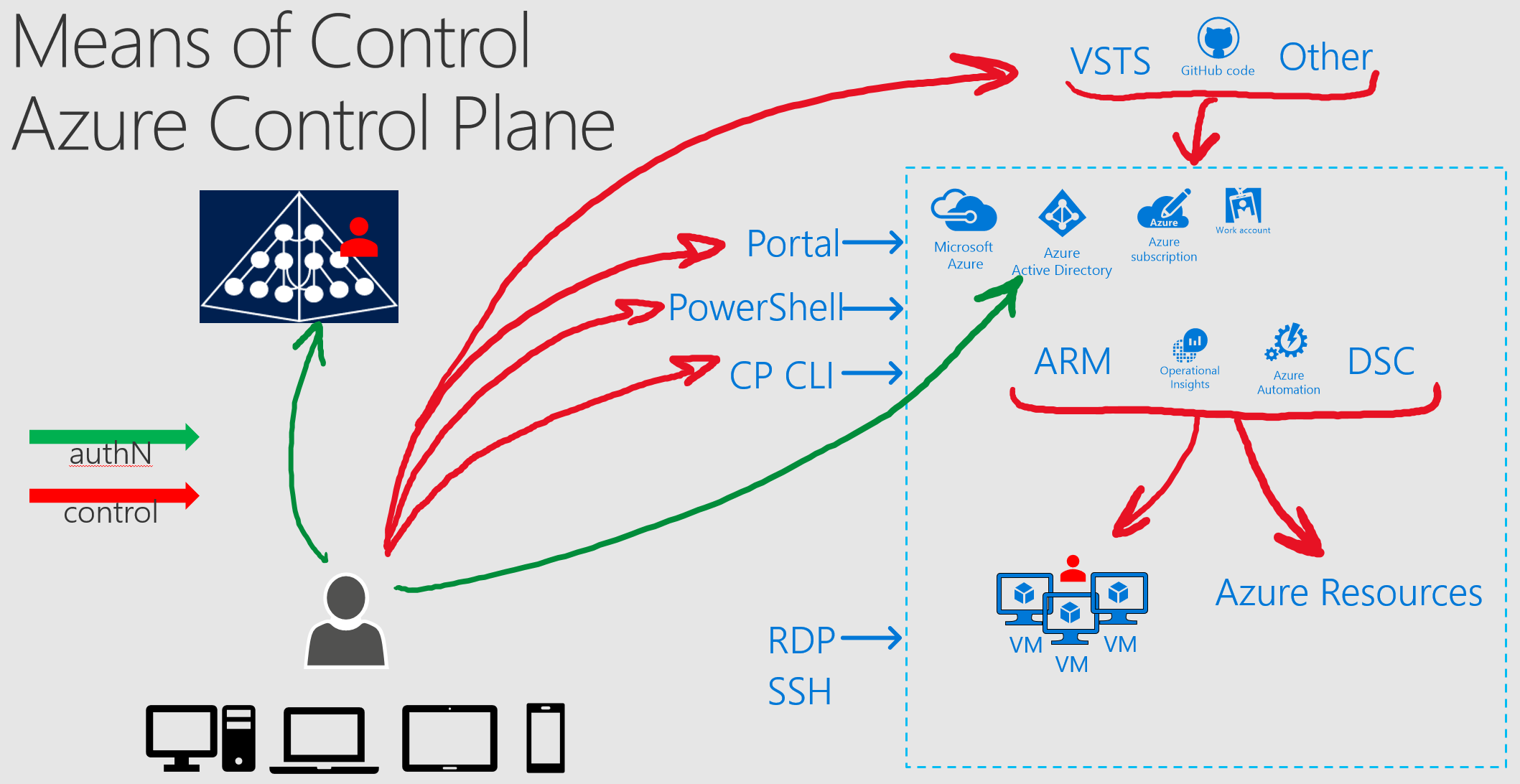

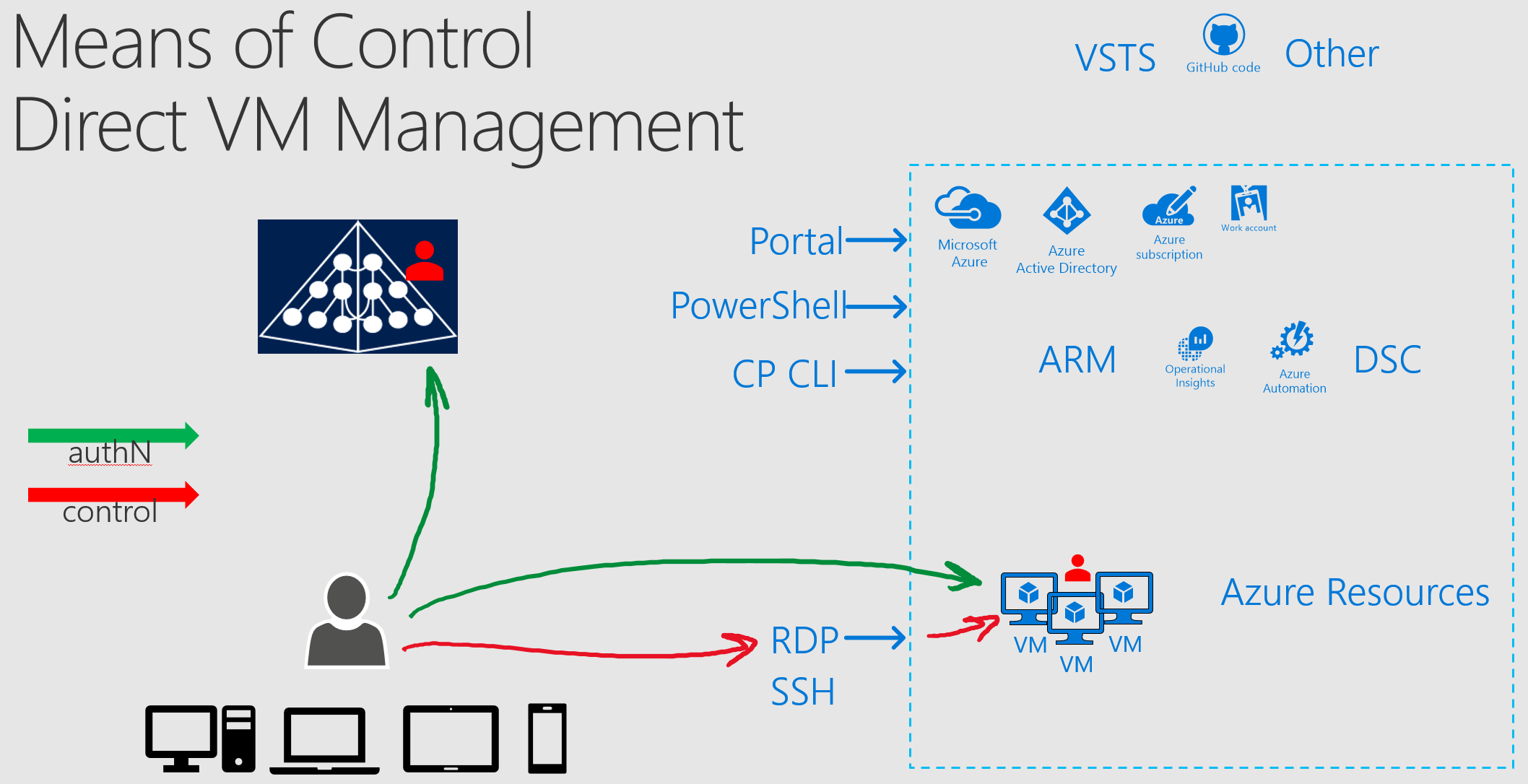

Before we can look at different administration models for Azure-based resources, we need to understand how resources in Azure can be controlled through different technical mechanisms.

Means of Control over Azure-based Resources

Resources deployed in Azure can be controlled via the following mechanisms.

1. Administration of Azure-based resources via the Azure Control Plane

The Azure Control Plane is the de facto access mechanism for controlling anything in Azure. All Azure AD accounts with administrative roles must be protected to reduce the likelihood of the customer environment being compromised.

- What is controlled: All Azure resources, such as Azure AD, Resource Groups, RBAC, Networking, VM resources, Encryption, and SQL DBs, i.e., any resource in Azure

- Tools used on the client side:
  - Browser
  - PowerShell
  - Cross-platform CLI
  - Visual Studio
  - VSTS
  - Visual Studio Code
  - GitHub, and many others

- Azure technologies and controls used for administration:
  - Account resets
  - ARM templates
  - Azure DSC
  - Azure Automation, and others

- Accounts used for administration:
  - An Azure AD account with the RBAC permissions needed to accomplish the task
  - The account can be cloud-only or synchronized from on-premises AD DS
  - Main types of accounts with admin access:
    - Account Admins in the Enterprise Portal
    - Tenant-level admin roles:
      - Global Admins in the Azure AD tenant
      - Other Azure AD roles
    - Subscription-level admin roles:
      - Owner
      - Service Admin and Co-Admin (access to the legacy portal)
      - User Access Administrator
    - Accounts with RBAC roles assigned at the subscription, resource group, or resource level

- Means of control over the resources in Azure:
  - Direct: an Azure AD account uses permissions assigned on the Azure resource
  - Indirect: via one of the Azure controls that has the ability to modify an Azure resource or the state of a compute resource (such as a VM)
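The role list above rests on one rule worth making concrete: an RBAC assignment applies at its own scope and at every scope beneath it, so an Owner at the subscription level is effectively an Owner on every VM in that subscription. Below is a minimal Python model of that inheritance, not the Azure SDK. The resource IDs follow the Azure resource ID format but are made up, and treating Owner and User Access Administrator as the "privileged" set (both can manage role assignments) is an assumption for this sketch.

```python
from dataclasses import dataclass

# Simplified model of Azure RBAC scope inheritance; not the Azure SDK.
# The resource IDs below are invented examples in Azure resource ID format.

@dataclass(frozen=True)
class RoleAssignment:
    principal: str
    role: str
    scope: str

# Assumption for this sketch: roles that can grant access to others.
PRIVILEGED_ROLES = {"Owner", "User Access Administrator"}

def _segments(scope: str) -> list:
    # Compare scopes segment by segment, so ".../abc" never matches ".../abcd".
    # IDs are compared case-insensitively in this sketch.
    return [part.lower() for part in scope.strip("/").split("/") if part]

def applies_to(assignment_scope: str, target_scope: str) -> bool:
    """An assignment covers its own scope and every child scope."""
    parent = _segments(assignment_scope)
    return _segments(target_scope)[: len(parent)] == parent

def effective_roles(assignments, principal, target_scope):
    return {a.role for a in assignments
            if a.principal == principal and applies_to(a.scope, target_scope)}

def privileged_principals(assignments, target_scope):
    """Identities holding a privileged role that reaches target_scope."""
    return {a.principal for a in assignments
            if a.role in PRIVILEGED_ROLES and applies_to(a.scope, target_scope)}

SUB = "/subscriptions/00000000-0000-0000-0000-000000000000"
RG = SUB + "/resourceGroups/app-rg"
VM = RG + "/providers/Microsoft.Compute/virtualMachines/web01"

assignments = [
    RoleAssignment("alice", "Owner", SUB),
    RoleAssignment("bob", "Virtual Machine Contributor", RG),
    RoleAssignment("carol", "Reader", VM),
]

print(effective_roles(assignments, "alice", VM))  # {'Owner'}
print(effective_roles(assignments, "carol", RG))  # set()
print(privileged_principals(assignments, VM))     # {'alice'}
```

Every identity that a check like `privileged_principals` returns for a production scope belongs in the group of admin accounts that must be protected, regardless of the level at which its role was assigned.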

It is important to understand that the Azure control plane is used not only to configure a resource's Azure-level settings, but also to configure and manage the settings and applications inside the resource itself. For example, it can be used to configure a VM with specific roles and applications and then ensure that the VM stays configured exactly as needed, all without ever logging into it via traditional remote access mechanisms.
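The "configure it, then keep it configured" idea can be sketched as a desired-state comparison: declare the settings the VM should have, compare them with what it reports, and apply only the differences. This is a hypothetical Python model of the concept behind tools such as Azure DSC, not their actual interface; the function names and setting names are invented for illustration.

```python
# Hypothetical desired-state model; function names and settings are illustrative only.

def plan_changes(desired: dict, actual: dict) -> dict:
    """Settings that differ from (or are missing in) the VM's reported state."""
    return {key: value for key, value in desired.items() if actual.get(key) != value}

def reconcile(desired: dict, actual: dict) -> dict:
    """Apply the planned changes and return what was changed."""
    changes = plan_changes(desired, actual)
    # Stand-in for an agent on the VM applying the configuration;
    # here we simply update the in-memory state.
    actual.update(changes)
    return changes

desired = {"role:Web-Server": "Installed", "service:W3SVC": "Running", "firewall:443": "Open"}
actual = {"role:Web-Server": "Installed", "service:W3SVC": "Stopped"}

print(reconcile(desired, actual))  # {'service:W3SVC': 'Running', 'firewall:443': 'Open'}
print(reconcile(desired, actual))  # {}
```

Because reconciling is idempotent, it can run on a schedule: once the VM matches the declared state the plan is empty, and any later drift is corrected without an administrator logging in.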

The following diagram shows the different means of control over Azure via the Azure Control Plane:

2. RDP, SSH, or remote management into the target VM

Console-based access, or access via remote management tools, is the classic way to manage most operating systems.

This can be done via one of the following mechanisms:

- Connect to the target VM directly, over ExpressRoute, a Site-to-Site VPN, or a Point-to-Site VPN. This requires a routed connection from the client to the target VM
- Connect via a jump server VM
- Connect via a jump server VM protected with just-in-time (JIT) VM access

Accounts used for administration:

- Operating system accounts, such as Windows local accounts or AD DS domain accounts
- Authorization (AuthZ) within the VM is handled by the OS authorization model
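Just-in-time access is easiest to understand as a time-limited allow rule: management ports stay closed until an approved request opens one port, for one source address, for a bounded window. The sketch below models that rule in Python. `JitGrant`, `request_access`, `is_allowed`, the VM name, and the three-hour policy cap are hypothetical and do not reflect the Azure Security Center API or its defaults; the addresses come from documentation-only IP ranges.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from ipaddress import ip_address, ip_network

# Hypothetical model of just-in-time (JIT) VM access; not an Azure API.

MAX_WINDOW = timedelta(hours=3)  # hypothetical policy cap

@dataclass(frozen=True)
class JitGrant:
    vm: str
    port: int
    source: str        # CIDR range allowed to connect, e.g. one admin workstation
    expires: datetime

def request_access(vm: str, port: int, source: str, now: datetime, window: timedelta) -> JitGrant:
    """Approve a time-limited opening, refusing windows longer than the policy allows."""
    if window > MAX_WINDOW:
        raise ValueError("requested window exceeds the policy maximum")
    return JitGrant(vm, port, source, now + window)

def is_allowed(grants, vm: str, port: int, client_ip: str, now: datetime) -> bool:
    """Default deny: traffic passes only if an unexpired grant matches VM, port, and source."""
    return any(
        g.vm == vm
        and g.port == port
        and ip_address(client_ip) in ip_network(g.source)
        and now < g.expires
        for g in grants
    )

start = datetime(2018, 1, 1, 9, 0, tzinfo=timezone.utc)
grants = [request_access("jump01", 3389, "203.0.113.10/32", start, timedelta(hours=1))]

print(is_allowed(grants, "jump01", 3389, "203.0.113.10", start + timedelta(minutes=30)))  # True
print(is_allowed(grants, "jump01", 3389, "198.51.100.7", start + timedelta(minutes=30)))  # False
print(is_allowed(grants, "jump01", 3389, "203.0.113.10", start + timedelta(hours=2)))     # False
```

Outside the window, the jump server is unreachable on RDP even from the approved address, which shrinks its exposure compared with a jump server whose management port is always open.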

3. Manage via tools like Project “Honolulu”

- What it is: Project “Honolulu” is the code name for a new set of remote management tools that use the browser as their client.
- Management of the target system is done via one of the following:
  - A direct connection from the client to the target system, authenticating with an OS-based account
  - A connection via a web server management proxy that has a direct connection to the target systems; authentication (AuthN) is done with an OS-based account
- https://aka.ms/ProjectHonolulu

Behind all of these “means of control” mechanisms are identities (user accounts) which must be properly secured. In the next post we’ll look at current administrative practices and identify where they fall short.